Robots need to know why they are doing a job if they are to work effectively and safely alongside people in the near future. In simple terms, this means machines need to understand motive the way humans do, rather than performing tasks blindly, without context.

According to a new article by the National Centre for Nuclear Robotics (NCNR), based at the University of Birmingham, this could herald a profound but necessary change for the world of robotics.

Lead author Dr Valerio Ortenzi, at the University of Birmingham, argues that the shift in thinking will be necessary as economies embrace automation, connectivity and digitisation (‘Industry 4.0’) and as levels of human–robot interaction, whether in factories or homes, increase dramatically.

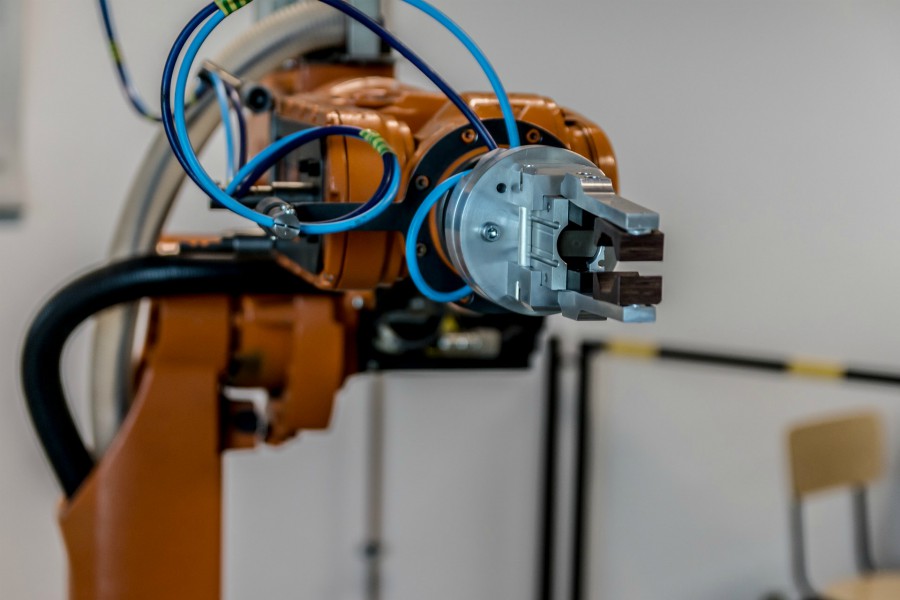

The paper, published in Nature Machine Intelligence, explores the issue of robots using objects. ‘Grasping’ is an action perfected long ago in nature, but one that represents the cutting edge of robotics research.

Most factory-based machines are ‘dumb’, blindly picking up familiar objects that appear in predetermined places at just the right moment. Getting a machine to pick up unfamiliar, randomly presented objects requires the seamless interaction of multiple complex technologies. These include vision systems and advanced AI, so the machine can see the target and determine its properties (for example, is it rigid or flexible?), and potentially sensors in the gripper, so the robot does not inadvertently crush an object it has been told to pick up.

Even when all this is accomplished, researchers in the National Centre for Nuclear Robotics highlighted a fundamental issue: what has traditionally counted as a ‘successful’ grasp for a robot might actually be a real-world failure, because the machine does not take into account what the goal is and why it is picking an object up.

The paper cites the example of a robot in a factory picking up an object for delivery to a customer. It successfully executes the task, holding the package securely without causing damage. Unfortunately, the robot’s gripper obscures a crucial barcode, which means the object can’t be tracked and the firm has no idea whether the item has been picked up. The whole delivery system breaks down because the robot does not know the consequences of holding a box the wrong way.

Dr Ortenzi gives other examples, involving robots working alongside people.

“Imagine asking a robot to pass you a screwdriver in a workshop. Based on current conventions, the best way for a robot to pick up the tool is by the handle,” he said. “Unfortunately, that could mean that a hugely powerful machine then thrusts a potentially lethal blade towards you, at speed. Instead, the robot needs to know what the end goal is, i.e., to pass the screwdriver safely to its human colleague, in order to rethink its actions.

“Another scenario envisages a robot passing a glass of water to a resident in a care home. It must ensure not only that it doesn’t drop the glass, but also that water doesn’t spill over the recipient during the act of passing, and that the glass is presented in such a way that the person can take hold of it.

“What is obvious to humans has to be programmed into a machine, and this requires a profoundly different approach. The traditional metrics that researchers have used over the past twenty years to assess robotic manipulation are not sufficient. In the most practical sense, robots need a new philosophy to get a grip.”

Professor Rustam Stolkin, NCNR Director, said, “The National Centre for Nuclear Robotics is unique in working on practical problems with industry, while simultaneously generating the highest calibre of cutting-edge academic research – exemplified by this landmark paper.”

The research was carried out in collaboration with the Centre of Excellence for Robotic Vision at Queensland University of Technology, Australia, Scuola Superiore Sant’Anna, Italy, the German Aerospace Center (DLR), Germany, and the University of Pisa, Italy.

Editor’s Note: This article was republished from the University of Birmingham.
